MONDAY, 6 APRIL 2020

We are in the midst of an artificial intelligence revolution. This might come as a surprise, since we have been trained by science fiction to expect an artificial intelligence revolution in the form of malevolent automatons taking over our streets. In reality, the artificial intelligence (AI) sector has been growing exponentially in the last decade, with increasing computer power and the expanding ubiquity of big data allowing AI systems to seep ever further into our everyday lives.

Uses of artificial intelligence range from the seemingly benign, such as predictive text and route-finding, to the potentially sinister, with facial recognition software deployed in public spaces and algorithms used to advise on prison sentences. At the moment, even the most adept AI systems are far from reaching human-like intelligence. With swift progress in machine learning research, however, computers can tackle increasingly difficult and nuanced tasks. With the potential for the creation of virtual life on the horizon, it is necessary to question what kind of intelligence we are creating.

In 2016, Microsoft unveiled Tay, a Twitter chatbot that Microsoft’s Technology and Research team had ‘taught’ to take part in conversations using public data. Part social research project, part AI research project, Tay was supposed to learn to converse better by interacting with people on Twitter. Within a day it had grown from an innocent chatbot delivering joke horoscopes into an account that was tweeting racist and misogynistic hate speech. The account was quickly taken down, amidst much embarrassment, but it had already revealed a worrying truth: left unchecked, AI has the capacity to replicate the worst aspects of humanity. Through a phenomenon referred to as algorithmic bias, these systems can reproduce the injustices of the world we live in. As the AI sector moves forward, we are forced to consider what impact algorithmic bias has on the algorithms we use and, crucially, who it is impacting.

How do machine learning systems become biased?

Dr Jennifer Cobbe, Coordinator of the Trust & Technology Initiative and Research Associate and Affiliated Lecturer in the Compliant and Accountable Systems Group in the Computer Laboratory, University of Cambridge, points out two principal ways in which a machine learning system might develop a bias. First, while machine learning programs learn patterns by themselves, they are still designed by humans. “The assumptions and understanding of the people designing the system will always be embedded into the system”, Dr Cobbe explains. For example, a model designer must decide whether to include certain information in the set of data that the model learns from. If these assumptions are misguided or incomplete, this may lead to the system treating a group of people unfairly. Including gender in a model, for instance, increases the chance of the model discriminating based on that feature.

Second, these systems are often trained on historical data, which can contain evidence of historical marginalisation of groups of people. When learning from such data, the model will hence replicate any bias that is present in that data set. In 2015, technology giant Amazon experienced this first-hand with their recently developed tool for rating applicants based on their resumés. The program was trained on data from past applicants. Since the tech industry has long been dominated by men, male applicants made up the majority of the data set. This resulted in a system which was inherently biased against female applicants.

Given the severity of the problem, researchers have started looking into how to detect and possibly remove bias using statistical methods or by constraining the model. Their findings indicate that it is crucial for model designers to ensure that their data set is balanced and their assumptions are as complete as possible to reduce bias. However, fully eliminating bias is difficult, if not impossible. The creators of Amazon’s resumé scoring system trialled removing gender information from resumés before applying the model. They found that the model then used information such as membership of women’s sports clubs as a substitute for explicit gender information, and thus remained biased.
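The proxy effect described above can be illustrated with a minimal Python sketch. It is not Amazon's system: the records, the club feature and the helper names (`fit_hire_rates`, `mean_score_by_gender`) are all invented for illustration, and the "model" is nothing more than the historical hire rate for each value of a single feature. Gender is withheld from the model, yet the scores it produces still differ by gender because club membership stands in for it.

```python
# Toy historical records: (gender, member_of_womens_club, was_hired).
# All values are invented for illustration only.
history = (
    [("F", True, False)] * 30 + [("F", True, True)] * 10 +
    [("F", False, False)] * 5 + [("F", False, True)] * 5 +
    [("M", False, False)] * 20 + [("M", False, True)] * 30
)


def fit_hire_rates(records):
    """Learn the historical hire rate for each value of the club feature.

    Gender is deliberately ignored: the 'model' never sees it.
    """
    counts = {}
    for _gender, club, hired in records:
        total, positives = counts.get(club, (0, 0))
        counts[club] = (total + 1, positives + int(hired))
    return {club: positives / total
            for club, (total, positives) in counts.items()}


def mean_score_by_gender(records, rates):
    """Average model score per gender, used only to audit the model."""
    sums = {}
    for gender, club, _hired in records:
        total, score = sums.get(gender, (0, 0.0))
        sums[gender] = (total + 1, score + rates[club])
    return {gender: score / total
            for gender, (total, score) in sums.items()}


rates = fit_hire_rates(history)
audit = mean_score_by_gender(history, rates)
print("Score by club membership:", rates)
print("Mean score by gender:", audit)
```

In this toy data, club members receive a score of 0.25 and non-members roughly 0.58, so women, who make up all of the club members, end up with a lower average score than men even though gender was never an input. Auditing scores against a protected attribute that the model never saw, as `mean_score_by_gender` does, is one simple example of a statistical check for this kind of bias.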

How avoidable is bias in machine learning models?

Many of these examples hint at a more fundamental problem with machine learning, which is that bias is inherent to any classifier. Dr Cobbe explains that models are, by definition, simplifications of a usually complex reality. “With machine learning, you are bound to use the next best model because the optimal model is computationally intractable.” This is unproblematic when classifying mundane images. However, when applied to people, this means that AI systems judge individuals based on group-level characteristics and thus their decisions result in pigeonholing. Furthermore, when the group-level characteristics are the product of an unequal society, the resulting AI can reinforce this inequality.

For example, an algorithm in the USA is being used to advise judges on defendants’ likelihood to reoffend, helping inform the severity of their sentencing. This algorithm, however, does not exist in a vacuum: Black Americans make up 37.4% of the prison population whilst constituting only 13.4% of the general population. The algorithm picks up on this and interprets it as Black defendants being more likely to reoffend, reinforcing the bias in an already-broken system. Worse, the expectation of impartiality and objectivity from an algorithm means that this bias can be more readily accepted than if it were coming from a human judge.
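To put the two percentages quoted above on a single scale, the short Python sketch below computes how over-represented Black Americans are in the prison population relative to the general population. The function name `representation_ratio` is an assumption made for illustration. The figure it produces describes the group as a whole and says nothing about any individual defendant, which is exactly the distinction a model trained on such data can blur.

```python
# Figures quoted in the article (percentages).
prison_share = 37.4      # Black Americans as a share of the prison population
population_share = 13.4  # Black Americans as a share of the general population


def representation_ratio(share_in_subset, share_in_population):
    """How many times more common a group is in a subset than in the
    population as a whole. A value of 1.0 means proportional representation."""
    return share_in_subset / share_in_population


ratio = representation_ratio(prison_share, population_share)
print(f"Representation ratio: {ratio:.2f}")  # prints "Representation ratio: 2.79"
```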

What can we do when some degree of bias is actually inherent to machine learning models? “While we can try to reduce the impact that bias in an AI system has, we might also have to accept that we cannot use machine learning and have to rely on people in domains where the consequences are too dangerous”, Dr Cobbe explains. This is usually the case when a machine learning system deals with people in social contexts and when its classification result can severely affect an individual’s life.

How can we manage bias in machine learning models?

Ultimately, dealing with biased machine learning systems will come down to holding those who run the model accountable, especially in cases where models discriminate based on characteristics of individuals that are protected by law, like sex, age, or race. The European General Data Protection Regulation (GDPR), which went into effect in May 2018, has provisions on automated individual decision making, giving individuals the right not to be subject to decisions based solely on machine learning algorithms. However, Dr Cobbe argues that this law does not go far enough and burdens individuals with defending themselves against bad automated decisions. Instead, legislation should enforce “review-ability”, meaning that model designers have to create systems such that they can easily be reviewed by authorities, making legislation more easily enforceable. This might include, for example, providing access to the training data and any explicit assumptions built into the system.

With machine learning spreading into more and more fields, it is important that its creators are aware of the dangers that biased AI systems pose. Those designing machine learning models must be mindful of the risk of accidentally imparting their own biases on the system, and must ensure that their models do not reproduce bias found in real-world data sets. If we keep this in mind, we can help ensure that as AI systems make their way into more areas of our lives, they do not bring the biases of their creators with them.

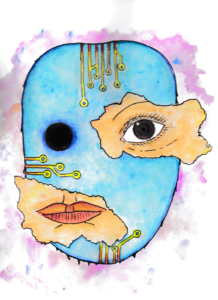

Felix Opolka is a PhD student in Artificial Intelligence at Christ's College. Evan Wroe is a Chemistry PhD student at Queens' College studying artificial photosynthesis. Artwork by Rita Sasidharan.