WEDNESDAY, 15 MAY 2013

Listen. Silence? Or the strange cacophony of ordered sound that is the latest Rihanna track or a Bach partita? If you are not plugged into your iPod or humming a tune right now, chances are you have been at some point today. Music is the soundtrack to our lives; we use it to bring pleasure, cure boredom, connect with others and even to approach the divine. For some it is a reason to live. What’s more, music in one form or another is found in every culture; it is part of what makes us human. And yet, explaining why we make music is not easy. What is it that makes us sing, go to concerts or dance at a disco? To find possible reasons, we shall look at what music really is and the effects it has on us. But to start at the beginning, we should ask where musicality came from and why it arose in the first place.

Darwin, in The Descent of Man, described man’s musical ability as “amongst the most mysterious with which he is endowed”. Since then, scientists have often avoided the great ‘Why?’ of music. Evolutionary theories are difficult to test: the present-day advantages of musicality are likely to differ from those it gave our ancestors. The psychologist Steven Pinker famously dismissed music as “auditory cheesecake”: a pleasant enough concoction designed to tickle the senses, but of no special evolutionary importance. His claim, widely followed by scientists since, was that our capacity for producing and appreciating music is nothing more than a by-product of brain mechanisms that evolved for other functions, such as language. Indeed, language centres in the brain have been implicated in processing the ‘grammar of music’. However, we shall see that this does not necessarily make music a “useless” by-product, as Pinker would have us believe; it might serve important functions in its own right. Music may, for example, allow communication between mother and child before true words and language are acquired. Young infants already display a prodigious ability to discriminate between musical stimuli: they prefer pleasant sounds to dissonant ones, recognise tunes even when they are transposed, and show surprise when unexpected chords flout the normal rules of ‘musical grammar’.

An alternative theory of why we make music was proposed by Darwin and has been maintained by many musicologists since. In this view, music originally served courtship, giving musicality a selective advantage because better musicians obtained better mates. For Darwin, this explained why seemingly arbitrary combinations of pitches and beats have such a powerful influence over our emotions: music evolved because it evoked feelings of love and attraction. In humans, however, musical ability develops very early in infancy and neither sex is more musical than the other, both contrary to what we would expect of a sexually selected trait. Darwin’s hypothesis is therefore unlikely to hold true for our own species.

For animals, however, a sexually selected role for music is much more plausible. Deep in the ocean, the humpback whale is responsible for many of the haunting melodies we call ‘whale songs’. These songs are sung mainly by males during the mating season, so they are likely to be used to attract females; they are perhaps the equivalent of a peacock’s showy tail. The same is true for the majority of bird species. Darwin noted that it is mostly male birds that sing, and that they do so mainly during the mating season. He therefore saw sexual selection as a critical factor in the evolution of birdsong. Today, we know that birdsong can serve other purposes too, such as marking territory. Surprisingly, not all bird music is sung; some birds have been reported to use percussion instruments. The Palm Cockatoo, for example, breaks off a twig and shapes it into a drumstick. It then finds a hollow log with the desired resonant frequency and, holding the stick in its foot, beats on the log as part of its courtship ritual. Some birds also produce sounds with their feathers, the best-known example being the Common Snipe, which spreads specialised tail feathers to produce an increasingly loud humming sound as it dives from the sky.

So far, then, sexual selection seems to have played a role in the evolution of musicality, at least in animals. What is more, it is thought that we find the sounds of some animals pleasing because their compositions bear some similarity to our own. Whale songs, for example, tend to use rhythms and phrase lengths similar to those of human music. A song can last anywhere between 5 and 30 minutes, which has been taken to suggest that whales have an attention span similar to ours. Like us, they often build themes out of several phrases and repeat them. The music of birds shows similar parallels: some birds use the same scales that are found in our music. The North American Canyon Wren sings in the chromatic scale (the 12 semitones that make up an octave), and its trill cascades down the scale like the opening of Chopin’s Revolutionary Etude. The Hermit Thrush, on the other hand, uses the pentatonic scale (five different tones within the octave), which is characteristic of much Asian music.
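To make the two scales mentioned above concrete, here is a minimal Python sketch that computes their frequencies. It assumes twelve-tone equal temperament, the modern concert pitch A4 = 440 Hz and the major form of the pentatonic scale; none of these choices come from the text, and real birdsong should not be expected to match these idealised frequencies exactly.

```python
A4_HZ = 440.0  # assumed reference pitch (modern concert pitch)


def equal_tempered(root_hz: float, n_semitones: int) -> float:
    """Frequency n_semitones above root_hz in twelve-tone equal temperament."""
    return root_hz * 2 ** (n_semitones / 12)


def chromatic_scale(root_hz: float) -> list[float]:
    """All 12 semitones of the octave starting on root_hz, plus the octave itself."""
    return [equal_tempered(root_hz, n) for n in range(13)]


# Major pentatonic scale: degrees 1, 2, 3, 5 and 6 of the major scale,
# i.e. 0, 2, 4, 7 and 9 semitones above the root (an assumed choice of form).
MAJOR_PENTATONIC_STEPS = (0, 2, 4, 7, 9)


def pentatonic_scale(root_hz: float) -> list[float]:
    """The five notes of a major pentatonic scale starting on root_hz."""
    return [equal_tempered(root_hz, n) for n in MAJOR_PENTATONIC_STEPS]


if __name__ == "__main__":
    print("chromatic: ", [round(f, 2) for f in chromatic_scale(A4_HZ)])
    print("pentatonic:", [round(f, 2) for f in pentatonic_scale(A4_HZ)])
```

Each chromatic step multiplies the frequency by the twelfth root of two, so twelve steps land exactly on the octave (880 Hz here); the pentatonic scale simply keeps five of those twelve notes.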

But is animal music really so similar to ours? Are animal songs actually music? Looking closer, birds are unable to recognise melodies that have been shifted up or down in pitch, which suggests that this ability evolved after birds and mammals diverged. The picture becomes even clearer if we look at our closest relatives. Non-human primates lack the ability to learn complex new vocalisations. The tamarin monkey, while able to discriminate pleasant sounds from dissonant or harsh ones, shows no preference for either. Rhesus monkeys fail to recognise a melody that has been transposed by half an octave, but can do so when it is transposed by a full octave. However, they lose this skill when confronted with atonal chromatic melodies. It therefore appears that tonality, the organised relationship of tones, has a special status.
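Transposing a tune multiplies every frequency by the same factor, so the pattern of steps between successive notes is unchanged; a shift of a whole octave additionally lands each note on the same note name (its pitch class). The Python sketch below illustrates that distinction for an octave shift versus a half-octave shift. The example tune and the A4 = 440 Hz reference are illustrative assumptions, and the sketch makes no claim about how monkeys actually process melodies.

```python
import math

A4_HZ = 440.0  # assumed reference pitch


def step_sizes(melody_hz):
    """Size in semitones of each step between successive notes (the melody's shape)."""
    return [12 * math.log2(b / a) for a, b in zip(melody_hz, melody_hz[1:])]


def transpose(melody_hz, n_semitones):
    """Shift every note by the same number of semitones, i.e. scale by one factor."""
    factor = 2 ** (n_semitones / 12)
    return [f * factor for f in melody_hz]


def pitch_classes(melody_hz):
    """Note name of each note as a number 0-11 (0 = A), ignoring its octave."""
    return [round(12 * math.log2(f / A4_HZ)) % 12 for f in melody_hz]


# Hypothetical example tune: C4, E4, G4, G4 (equal-tempered frequencies in Hz).
tune = [261.63, 329.63, 392.00, 392.00]
octave_up = transpose(tune, 12)
half_octave_up = transpose(tune, 6)

if __name__ == "__main__":
    print("steps:", [round(s, 2) for s in step_sizes(tune)])
    print("pitch classes, original:      ", pitch_classes(tune))
    print("pitch classes, octave up:     ", pitch_classes(octave_up))
    print("pitch classes, half-octave up:", pitch_classes(half_octave_up))
```

Both transposed versions keep the same steps (4, 3 and 0 semitones), but only the octave shift keeps the same note names; the half-octave shift moves every note to a different one.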

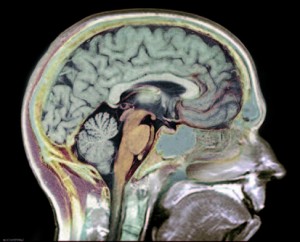

Ultimately, it is the ability to produce any kind of note and to order notes into chords and melodies that distinguishes humans from animals and allows us to actually create music. But where does this ability come from? An obvious possibility is that it results from the increased sophistication of our brains. And yet, when we look at how the brain processes music, we find no single music centre that could be the root of human musicality. Instead, music processing seems to be spread across different areas of the brain, some of which, such as the cerebellum, are primarily occupied with other functions.

When we compare musicians with non-musicians to pin down the neurological differences, there seems to be a strong correlation between musical ability and the volume of grey matter in the motor, auditory and visual-spatial regions of the brain. However, these differences, spread across several parts of the brain, most likely arise from the way the brain has been used rather than from any difference in innate musical ability. This is supported by looking at musicians’ brains while they are actually playing. The overall level of brain activity of pianists playing the piano is lower than that of control subjects asked to perform complicated finger movements. This seems to be because repeated practice has given the pianists more efficient neural pathways for playing the piano. Taken together, it appears that much of the brain processing required to perceive and play music is acquired rather than innate.

A striking neurological phenomenon in music is absolute or perfect pitch, the ability to recognise the exact pitch of a musical note without any reference. Research has shown no differences between people with and without perfect pitch in the auditory system or in the ability to hear particular notes, but there are nonetheless signs of neurological differences. Perfect pitch seems to reflect a particular ability to analyse frequency information and probably involves high-level cortical processing. It is mainly an act of cognition: a person must be able to remember a tone correctly, assign it a label such as B flat, and have heard that pitch often enough to recall the label when they hear it again. There are many theories as to why some people develop this ability and others do not, ranging from a particular genetic trait to their cultural or geographical upbringing. In any case, it is yet another example of the complex relationship between our brains and music.

The increased complexity of the human brain is likely to have played a major role in the development of musicality, giving us the ability to structure sound and organise it into music. This development has probably been helped by our ability to invent musical instruments, which can ultimately produce almost any sound we want. But how is it possible to control the pitch of a sound? To understand that, we have to go back to basics and look at how sound is created in the first place.

Sound is essentially a wave: a local disturbance that travels through a medium and, in doing so, transports energy from one place to another. Think of the water waves produced when a stone falls into an otherwise calm lake. The energy of the falling stone is first transferred to the water at the point of impact and then propagates away from that point, producing circular waves that we see as ripples.

Looking at the physics of sound, we can break it down into three main processes: generation, transmission and reception. Sound is generated by a source, which creates a disturbance in the medium. Different sources create different sounds; in music, the source can be an instrument or a voice. As an example, we will take our sound generator to be one string of an acoustic guitar. Plucking the string and letting it oscillate sets the surrounding air molecules vibrating. Air is a compressible gas, so its density and pressure can vary from place to place. This allows a sound wave to propagate through the air as alternating compressions (squeezing together) and rarefactions (pulling apart) of the air molecules, a bit like a pulse travelling along a stretched slinky. Our eardrum acts as the receiver, responding to the variations in air pressure that are transmitted to it from the source.
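The wave picture can be made quantitative with the relation v = fλ: the speed of a wave equals its frequency times its wavelength. This short Python sketch applies it to the six open strings of a guitar in standard tuning, assuming a speed of sound of 343 m/s (dry air at about 20 °C) and equal-tempered tuning with A4 = 440 Hz; these values are not given in the text.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C (assumed conditions)

# Open strings of a guitar in standard tuning (equal temperament, A4 = 440 Hz).
OPEN_STRINGS_HZ = {
    "E2": 82.41, "A2": 110.00, "D3": 146.83,
    "G3": 196.00, "B3": 246.94, "E4": 329.63,
}


def wavelength_m(frequency_hz: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength from v = f * lambda, i.e. lambda = v / f."""
    return speed_m_s / frequency_hz


def period_ms(frequency_hz: float) -> float:
    """Time for one full cycle of compression and rarefaction, in milliseconds."""
    return 1000.0 / frequency_hz


if __name__ == "__main__":
    for name, f in OPEN_STRINGS_HZ.items():
        print(f"{name}: {f:6.2f} Hz, wavelength {wavelength_m(f):.2f} m, period {period_ms(f):.2f} ms")
```

For the open A string (110 Hz) this gives a wavelength of about 3.1 m, longer than the guitar itself, with a new compression reaching the ear roughly every 9 ms.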

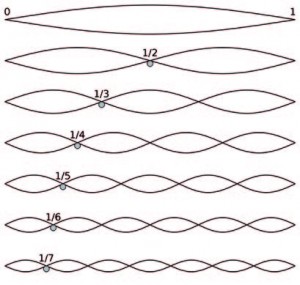

As we have seen, it is the ability to organise sound that allows us to create music. To create a melody, we have to be able to control the pitch of each note. On a guitar, the player presses the string down onto the fretboard, and the point at which it is pressed determines the pitch of the note. We can build a simple picture of pitch in terms of the frequency at which the string vibrates. Let’s start with the lowest note the string can produce. This is when the full length of the string is free to vibrate, and is known as the fundamental, or first harmonic, shown at the top of the string picture (left). We can produce a note one octave higher by pressing the string down exactly halfway along its length. This note has exactly twice the frequency of the fundamental. If the string instead vibrates in three equal sections (the third harmonic), each section oscillates at three times the frequency of the fundamental, producing a note an octave and a perfect fifth above the original. A note two octaves higher requires four times the frequency, with the string vibrating in four equal sections.
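The harmonic series described above can be checked with a little arithmetic. In this Python sketch the n-th harmonic has n times the fundamental frequency, and a frequency ratio r spans 12·log2(r) equal-tempered semitones. The helper `fret_distance_mm` assumes an ideal string whose frequency is inversely proportional to its vibrating length; the 110 Hz open string and the 650 mm scale length are illustrative values, not figures from the text.

```python
import math


def harmonic_frequency(fundamental_hz: float, n: int) -> float:
    """Frequency of the n-th harmonic, when the string vibrates in n equal sections."""
    return n * fundamental_hz


def semitones(ratio: float) -> float:
    """Size of a frequency ratio in equal-tempered semitones (12 per octave)."""
    return 12 * math.log2(ratio)


def fret_distance_mm(scale_length_mm: float, n_semitones: int) -> float:
    """Distance from the nut at which pressing the string raises its pitch by n_semitones.

    Assumes an ideal string: frequency is inversely proportional to vibrating
    length, so the remaining length must be scale_length / 2**(n / 12).
    """
    return scale_length_mm * (1 - 2 ** (-n_semitones / 12))


if __name__ == "__main__":
    f1 = 110.0  # illustrative fundamental: a guitar's open A string, in Hz
    for n in range(1, 5):
        f = harmonic_frequency(f1, n)
        print(f"harmonic {n}: {f:6.1f} Hz, {semitones(f / f1):5.2f} semitones above the fundamental")
    # Pressing at the octave position (12 semitones) halves the vibrating length.
    print(f"octave position on a 650 mm scale: {fret_distance_mm(650, 12):.1f} mm from the nut")
```

The third harmonic comes out at about 19.02 semitones, an octave (12) plus a perfect fifth (about 7), while only the fourth harmonic reaches two octaves (24 semitones). The octave position sits at 325 mm, exactly halfway along the string.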

Finally, music plays with the consonance and dissonance of different tones. In general, we perceive an interval, that is, a combination of any two notes, as consonant if it sounds pleasant to our ears. This depends largely on the ratio between the frequencies of the two notes. Take the song ‘Kumbaya’. Its first two notes form an interval known as a major third, whose frequencies are in the ratio 5:4. The first and third notes (the ‘Kum’ and the ‘ya’) form a perfect fifth, with a frequency ratio of 3:2: the upper note makes three vibrations in the time it takes the lower note to make two. Apart from the octave, the perfect fifth is the most consonant interval, and it is often perceived as solid and pure. Played together, these first three notes of ‘Kumbaya’ make a pleasant major chord (C major, if the song begins on C). Dissonant intervals, on the other hand, usually sound harsh and unpleasant, as when you press two adjacent keys on a piano at the same time. Such an interval has a much more complicated frequency ratio, roughly 18:17. This makes it sound unstable, as though it needs to resolve to a stable, consonant chord. One explanation for why we hear such combinations as unstable lies in the inner ear. There, two frequencies that are less than a critical bandwidth apart produce overlapping, interfering vibration patterns in the cochlea, making it difficult to tell the two notes apart. For higher notes, this critical bandwidth can lie between two and three semitones.
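The ratios quoted above can be compared on a common scale using cents, where 1200 cents make an octave and 100 cents an equal-tempered semitone. This Python sketch also estimates the width of the critical band with the Zwicker and Terhardt approximation, one standard empirical formula chosen here for illustration; it is not taken from the text, and other models, such as the equivalent rectangular bandwidth, give somewhat narrower bands.

```python
import math


def cents(ratio: float) -> float:
    """Size of a frequency ratio in cents (1200 cents = one octave)."""
    return 1200 * math.log2(ratio)


# Frequency ratios mentioned in the text.
INTERVALS = {
    "octave": 2 / 1,
    "perfect fifth": 3 / 2,
    "major third": 5 / 4,
    "adjacent piano keys (approx.)": 18 / 17,
}


def critical_bandwidth_hz(center_hz: float) -> float:
    """Zwicker and Terhardt approximation of the critical bandwidth, in Hz."""
    f_khz = center_hz / 1000
    return 25 + 75 * (1 + 1.4 * f_khz ** 2) ** 0.69


def critical_band_semitones(center_hz: float) -> float:
    """Width of the critical band centred on center_hz, expressed in semitones."""
    half = critical_bandwidth_hz(center_hz) / 2
    low, high = center_hz - half, center_hz + half
    if low <= 0:
        raise ValueError("band extends below 0 Hz; the estimate is not meaningful here")
    return 12 * math.log2(high / low)


if __name__ == "__main__":
    for name, ratio in INTERVALS.items():
        print(f"{name:30s} {ratio:.4f} {cents(ratio):8.2f} cents")
    for f in (250, 500, 1000, 2000, 4000):
        print(f"{f:5d} Hz: critical band about {critical_band_semitones(f):.2f} semitones")
```

With this approximation the critical band spans roughly 2.6 to 3 semitones for notes between 1 and 4 kHz, in line with the figure in the text, and noticeably more for low notes. The 18:17 ratio comes out at about 99 cents, very close to an equal-tempered semitone, while the just perfect fifth (about 702 cents) and major third (about 386 cents) sit near, but not exactly on, the 700 and 400 cents of a piano tuned in equal temperament.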

We now understand how we can manipulate sound and structure it into intervals, which can then be used to compose music. We also know that this ability distinguishes us from other animals. But still the question remains: why do it? Perhaps the answer lies in the effect that music can have on our minds. Understanding that we perceive certain sounds as pleasant allows us to investigate further and look at the physiological basis of such sensations.

Using brain scans, researchers have found that listening to music triggers the release of dopamine in regions of the brain known to respond to pleasurable stimuli. This effect was seen across virtually every genre and type of music, suggesting that it reflects a universal reaction to music rather than some intrinsic pleasurable quality of a particular song or tune. Nonetheless, we all know from experience that songs we know well can arouse individual reactions, and these can be particularly strong. This might at first seem contradictory: shouldn't a familiar song, which by definition has become somewhat predictable, trigger less dopamine release and so lose its pleasurable quality? In fact, the opposite is the case. Researchers have observed that dopamine activity in the brain increases before listeners even hear their favourite part of the music: we begin to feel good simply by anticipating what is to come. In the researchers’ words, this anticipatory phase can “create a sense of wanting and reward prediction”. It leads us to expect a resolution of the feelings building up in us as the music approaches its climax. Good composers and musicians can play with these expectations, changing their music in unexpected ways that keep us longing for the ending we thought we would hear. This in turn heightens the physiological response in the brain and can lead to even greater pleasure when the resolution finally comes.

The pleasure of listening to music is often accompanied by dramatic physical responses, such as changes in heart rate, breathing rate, skin conductance and facial expression, and piloerection (hairs standing on end). These physical and emotional responses are usually transient but can occasionally be life-changing, in some cases lifting what were thought to be intractable depressive states. In this sense music does act rather like a drug, and much research is going into how it might best be used therapeutically. However, whilst the emotions we perceive in music remain fairly consistent over time, music does not always evoke them in us to the same extent. Unlike caffeine or Prozac, the effect of music is highly context-specific: it seems to require an act of will to let music touch us and actively change our mood.

Finally, the idea that the emotions we perceive in music are relatively fixed is perhaps the key to understanding its ultimate function. Dr Ian Cross, working in Cambridge, certainly believes so. He describes music as conveying “floating intentionality”: its meaning is communicated between people in a reliable but imprecise manner. It is nearly always obvious what sentiment a piece of music is conveying, yet almost impossible to put this into words. This could provide a means of communication that in many ways transcends language. In particular, music-mediated interaction carries a lower risk of conflict than language; it is difficult to offend someone accidentally with a tune. The ultimate function of music might therefore lie in its social role. Musicality could have evolved under the selection pressures of increasing social organisation, with all its demands for clever communication. This view is supported by musical traditions across the world, in which song and dance are often an integral part of daily life, used to bind groups of people to a common purpose such as work, celebration or grief. One especially widespread example is the lullaby. Nearly every culture has a type of music intended to soothe infants, and there is considerable consistency in how lullabies sound. This brings us back to the very beginning: music is universally used as a form of pre-language communication between mother and child. What is more, it is equally important for communication between adults and for emotional well-being.

In the end, it seems that there is no one way to explain why we make music. There are evolutionary reasons as well as physiological and social ones. But by understanding what music actually is, where it has evolved from, how we use it, and what effects it has on our minds and hearts, we can appreciate that among the many things that make us human, our musicality is indeed “most mysterious”. After all, music can be a soundtrack, a song, a chord, a wave, and a drug we cannot seem to live without.

Matthew Dunstan is a 2nd year PhD student at the Department of Chemistry

Nicola Hodson is a 3rd year PhD student at the Cambridge Institute for Medical Research

Zac Kenton is a 4th year undergraduate at the Department of Applied Mathematics & Theoretical Physics

Elly Smith is a 2nd year undergraduate studying Biological Natural Sciences at Trinity Hall